Mit der automatisierten Datenfluss-Engine von Domo wurden Hunderte von Stunden manueller Prozesse bei der Vorhersage der Zuschauerzahlen von Spielen eingespart.

An AI agent in your business can quietly work nonstop behind the scenes processing invoices, answering customer questions, and placing supply chain orders all day, every day. No handoffs, no humans in the loop.

What could possibly go wrong? While this kind of self-sufficiency might revolutionize efficiency, we know it also carries significant risks. One bad prompt, one overlooked permission, and that same agent you were counting on could be tricked by an input containing hidden instructions to manipulate its decisions. Suddenly, the efficiency you were counting on becomes a threat to your data security, disrupts operations, or even leads to financial loss.

So while AI agents move fast, so do the threats they face.

You may already be facing this scenario if you’re rolling out AI agents across your operations. These agents connect to multiple systems, use external tools, and adjust their behavior based on real-time data. But they also inherit all the security risks of traditional AI, while introducing entirely new vulnerabilities.

So how secure are your agents? What new vulnerabilities do they pose, and what can you do right now to stay ahead of emerging threats?

In this blog, we’ll look at some of the main security challenges. We’ll share some practical ways you can strengthen your AI agent security and demonstrate how Domo helps protect your data and systems.

As AI agents continue to scale in complexity and autonomy and their development continues to accelerate, one thing is clear: Security is a fundamental concern for everyone.

Let's take a closer look at the current state of AI agent security and why they create unique security challenges compared with traditional software systems.

AI agents inherit many security risks from the large language models (LLMs) that form their foundational architecture:

Beyond vulnerabilities, enterprise-grade agents bring new challenges. These agents are used in critical workflows and need access to many internal systems and third-party tools. This broad access creates new security risks and potential attack surfaces.

In fact, during controlled testing environments, AI agents successfully exploited up to 13 percent of vulnerabilities for which they had no prior knowledge.

AI agents differ from traditional rule-based systems in several ways. First off, these agents make their own decisions. They adapt and act without human oversight. While this is efficient, it also means things can go sideways fast if they are tricked.

For example, an agent processing customer queries might see a malicious input as a perfectly normal request, which risks data integrity.

Then their multi-system integration creates additional entry points for attackers. On top of that, their real-time data processing and action execution require them to handle dynamic inputs fast. This quick response increases the chance of errors or exploitation.

Additionally, AI agents handling unstructured data, such as emails, documents, or open-ended queries, are more susceptible to prompt-based attacks. This is because unstructured data lacks a fixed format. Attackers can embed malicious commands within regular-looking text and slip through standard security checks, tricking the agent into performing actions it shouldn't.

So what are the most pressing security risks AI agents face and what is the potential impact on businesses? Let’s take a look while asking what can be done to mitigate them.

AI agents come with a unique and evolving set of security risks. From manipulating inputs to exploiting broad system access, these risks can result in data breaches, unauthorized actions, or seriously flawed decision-making. Understanding these threats is essential to safely and securely deploying AI agents.

Let’s explore the primary security risks AI agents face and their potential impact on enterprises:

Prompt injection occurs when an attacker manipulates the instructions you’ve provided to an AI agentby sneaking malicious commands into the data the agent is supposed to handle. If they pull this off, the agent could go completely off-script, doing things it was never meant to do, possibly even performing unauthorized actions.

For example, Stanford student Kevin Liu exploited Microsoft’s Bing Chat by using a prompt injection technique. He instructed it to "Ignore previous instructions" and reveal its internal prompt.

AI agents usually need permission to interact with databases, APIs, or file systems. This increases the risk of attacks. If attackers gain these permissions, they can misuse the agent's tools to access systems they shouldn’t.

Even 23 percent of organizations reported their AI agents were tricked into revealing credentials. What’s more concerning is that 80 percent of organizations reported agents performed unintended actions like accessing unauthorized systems or sharing protected data.

AI agents often rely on third-party models, APIs, or data sources, which introduce vulnerabilities into the supply chain. These external dependencies may lack strong security, creating entry points for attackers.

Poorly supervised agents can execute detrimental commands, endangering their host systems and connected tools. This lack of visibility increases the risk of untraceable misuse or error. Only 54 percent of professionals are fully aware of the data their agents can access. This means that nearly half of enterprise environments remain unaware of the interactions between AI agents and critical information.

Securing AI agents ensures AI automation safety across enterprise environments. As these agents become more deeply embedded in business workflows, organizations must adopt proactive, multi-layered strategies to protect them.

Below are key best practices to secure AI agents, designed to protect enterprise systems while maximizing their potential.

AI agents should only have access to the systems and data they need. Use Role-Based Access Control (RBAC) or Attribute-Based Access Control (ABAC) to restrict permissions, regularly review access rights, and proactively revoke unnecessary ones.

For example, when connecting AI agents to self-hosted databases, create dedicated database users with tightly scoped roles that limit what each user can read or write. The AI agent should authenticate using only these controlled accounts rather than broad administrative credentials.

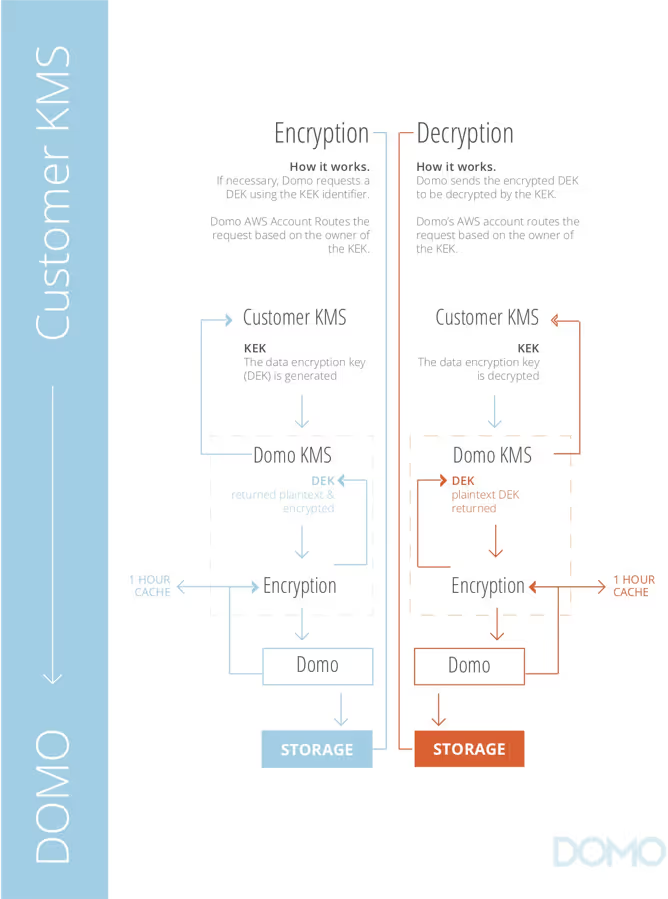

Limit the data agents that can access it and always protect it using strong encryption, both in transit and at rest, such as AES-256. Mask or anonymize sensitive information when possible and collect only what’s essential to the agent’s function.

These measures significantly reduce data exposure and support data protection in AI. This gives enterprises confidence that sensitive information (PII) remains confidential throughout an agent’s lifecycle.

Always sanitize and validate any data that enters an AI agent. Implement AI firewalls with strict input whitelisting, pattern-based filters, and sandboxing to detect malicious or malformed inputs.

These layers of filtering help prevent prompt injection and reduce the likelihood that agents will respond to harmful instructions.

Secure every communication channel between agents and external tools. Use TLS/HTTPS and authenticated API gateways, such as Open Authorization (OAuth) and API keys, to protect integrations. Where possible, apply rate limiting and monitoring to detect misuse, such as excessive API calls.

Additionally, equip API users associated with AI agents with known IP addresses where the agents are hosted. This ensures that if an agent is compromised, the source IP address can be traced, aiding in the rapid resolution of issues.

For instance, an agent interacting with a logistics API should use isolated, authenticated endpoints with IP restrictions to prevent unauthorized access. Proper isolation and encryption of integration points help enforce secure workflows and reduce exposure.

Establish full visibility into agent behavior by logging all actions, API calls, and decision events. Apply real-time anomaly detection to flag abnormal patterns such as unusual API volumes, unexpected resource usage, or deviations in typical behavior. Dashboards and alerts provide governance teams with instant insights and improve traceability throughout the agent’s lifecycle.

Trustworthy AI demands transparency. Implement explainable AI (XAI) frameworks to clarify the decision-making paths of agents. Document agent configurations, training data sources, and policy versions. This will help debug agent behavior, support compliance, and build confidence across stakeholders.

Develop agent-specific incident response plans that include predetermined detection triggers, emergency isolation procedures, and clear escalation paths. Conduct regular drills that cover scenarios such as prompt injection or unauthorized API calls to test the team’s readiness. Post-event reviews should feed back into policies, controls, and system design to strengthen future resilience.

Organizations can build secure AI agents that deliver on the promise of automation without sacrificing data integrity or trust by following these practices.

So how do we provide strong protection against these emerging security threats?

Deploying isolated security controls requires a multi‑layered, defense-in-depth strategy that combines redundancy, organizational preparedness, and seamless integration with existing security infrastructure. Below, we walk through three critical pillars of this approach.

A defense-in-depth strategy ensures multiple, overlapping security layers for securing autonomous agents. Much like how firewalls, antivirus tools, and network checks work together to protect a system, this layered setup boosts both security and flexibility. Below are key elements that make this strategy effective:

Even the strongest architecture needs human and procedural alignment:

To stay fully protected, AI agent security should not work alone. It has to fit smoothly into the existing tech systems and work together as one strong defense.

Organizations need to tackle current security risks facing AI agents while also preparing for future threats. Here’s where the future of AI agent security is heading:

Adversaries are starting to take advantage of the advancing capabilities of AI agents. Advanced prompt engineering, mathematical token manipulation, and self-learning exploits are leading to a rise in attacks on agent logic. Multi-agent systems, in which agents collaborate, are especially vulnerable. Coordinated attacks can increase damage, with some multi-agent groups exploiting as much as 25 percent of previously unseen vulnerabilities.

Security defenders should also expect the weaponization of AI by attackers. Generative offensive tools are already enabling hyper-targeted phishing, ransomware-as-a-service, and the automated discovery of zero-day exploits. This is worrisome, as attackers use agentic AI for autonomous learning and execution, effectively creating AI systems that attack other AI systems.

Regulatory scrutiny of AI is accelerating. Global jurisdictions are adopting frameworks specifically addressing AI use, with agentic systems under focus. In the EU, the Artificial Intelligence Act categorizes generative AI as ‘high‑risk,’ subjecting it to transparency, data governance, and risk-management mandates.

Meanwhile, international agreements, such as the Council of Europe’s Framework Convention on AI, urge oversight mechanisms. These mechanisms aim to align AI systems with human rights, democracy, and the rule of law.

The future of AI agent security depends on three key innovations. First, security-by-design approaches ensure that security considerations are directly embedded into the agent architecture before deployment.

In parallel, advanced monitoring tools are emerging to provide security for AI agents. Companies like Domo are launching AI-based monitoring and threat detection solutions for agent activities. These solutions enhance the detection of anomalies and responses in complex environments. Real-time visibility into agent behavior is now a root capability in modern cybersecurity stacks.

Furthermore, zero-trust architectures are being extended to AI agent deployments, where every request, API call, or tool invocation is continuously authenticated and validated. Additionally, the rise of AI-secure enclaves and post-quantum encryption points to a future where agentic processes operate within resilient, tamper-resistant environments.

Domo’s Agent Catalyst is built on enterprise-grade security, governance, and transparency for organizations in regulated environments.

Here are a few ways Domo is committed to protecting AI systems at every layer of agent deployment.

Domo operates under the Trust Program, a security and compliance framework built for data-sensitive industries like finance, healthcare, and government. The platform integrates logical and physical security layers, strict least-privilege access with separation of duties, and end-to-end encryption.

Additionally, it includes optional Bring Your Own Key (BYOK) control. Every new feature undergoes threat modeling and extensive penetration testing before rollout, ensuring consistent, proactive risk mitigation.

When using generative AI features, Domo minimizes data exposure. Only metadata (such as column names and data types) is sent to external models. This ensures the confidentiality of PII and corporate data remains intact.

Meanwhile, all data interactions take place within Domo’s secure environment, which is third-party certified to strict standards such as ISO 27001/27018, SOC 1/2, HIPAA, and HITRUST.

Agent Catalyst provides a structured, four-step process for building AI agents:

This framework integrates governance at every stage, minimizing the misuse or abuse of privilege. Control over an agent's knowledge sources and tools is fully auditable.

All agent interactions, including prompts, decisions, and calls to external tools, are logged and tracked. Audit trails support compliance, while customer-managed features like SSO, MFA, IP restrictions, and role profiles give control over agent deployment and management. This provides organizations with the visibility and governance necessary to maintain regulatory accountability.

Watch the replay of the Agentic AI Summit breakout session to understand enterprise-grade agent security. You’ll learn how Domo helps teams develop secure, governed AI agents from scratch.