
Have you ever tried to make decisions based on data, only to find it’s all over the place? That’s because data isn't just automatically ready for decision-making. If we don’t take the time to prepare it, it can be fragmented, inconsistent, and difficult to trust.

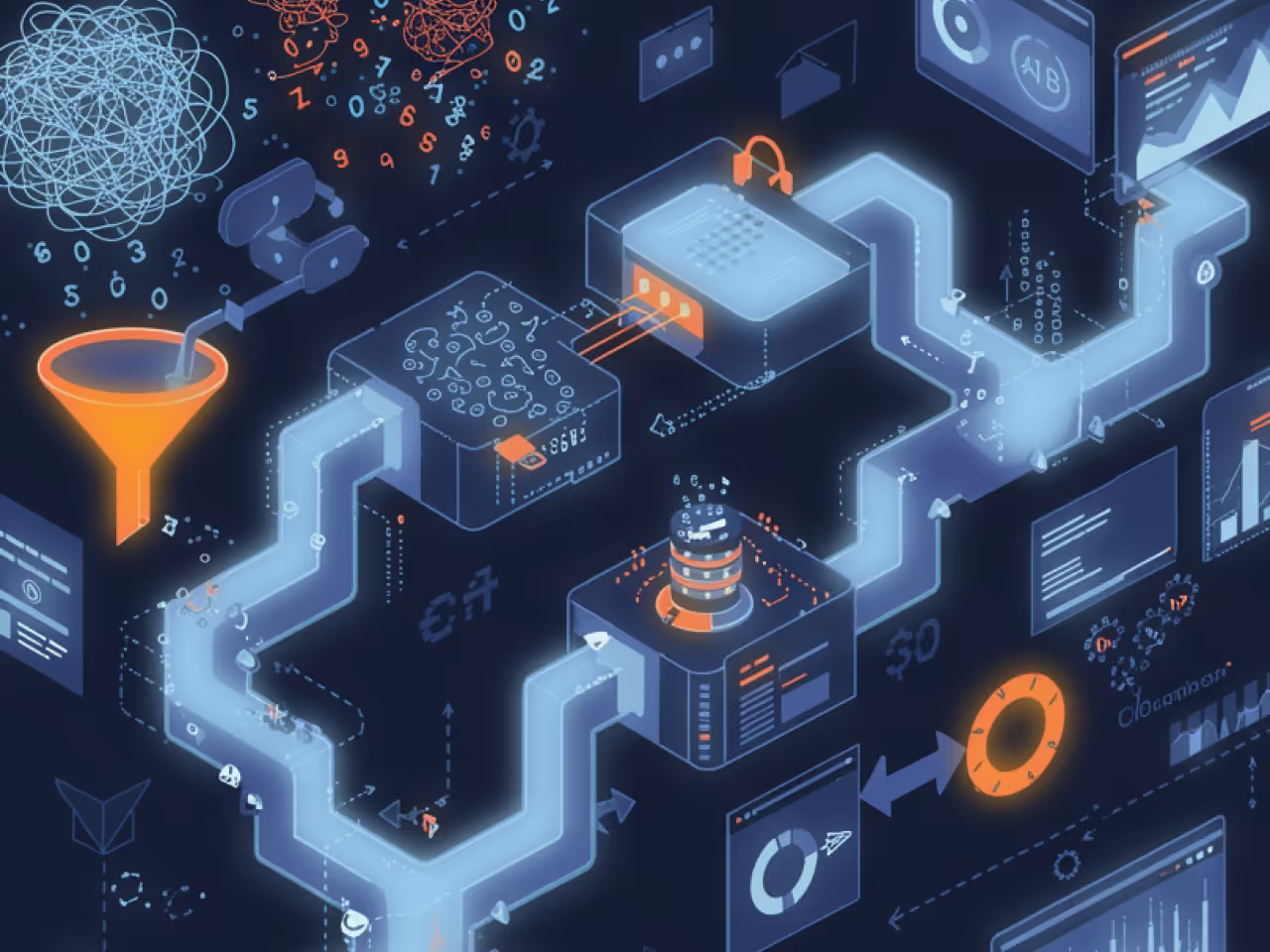

That's where data wrangling comes in—it transforms your raw, unfiltered information into organized, accurate data sets that help inform better business insights and strategies. The term "wrangling" is used because, kind of like handling a large, unruly herd, dealing with raw data often demands teamwork, thoughtful strategy, and hands-on problem-solving to keep everything under control.

If we ignore data wrangling, we risk making costly mistakes. Poor-quality data can lead to flawed analysis, missed opportunities, and lost revenue. Without proper cleaning and structuring, even the most sophisticated analytics tools won't deliver reliable outcomes.

In this guide, we'll walk you through what data wrangling is, the key steps involved, real-world examples, and the tools that can help streamline the process. By understanding these fundamentals, you’ll be able to turn data into a powerful asset rather than a liability.

Data wrangling is about taking the raw, messy data and turning it into something clean and structured we can use for analysis and decision-making. It’s a process where we ultimately discover, clean, structure, enrich, and validate the data, getting it ready to be used effectively.

Organizations collect huge amounts of data from different sources, like websites, customer interactions, and internal systems. But raw data rarely arrives in a neat package. Without preparation, it can lead to misleading results, wasted time, or flawed decisions.

The "wrangling" aspect captures the hands-on nature of this work—cleaning, shaping, and managing data so that it behaves in predictable, useful ways. Without it, companies risk making strategic decisions based on incomplete or inaccurate information.

Beyond operational efficiency, clean and accessible data directly impacts a business's bottom line. Unfortunately, 67 percent of organizations say they don’t completely trust the data they use for decision-making.

Companies that invest in cleaning, managing, and transforming their data not only reduce waste but also boost customer satisfaction by enabling better personalization, faster response times, and more informed service delivery. In a fast-paced market, the ability to trust and quickly act on data can make the difference between leading the competition and falling behind.

While data wrangling can vary depending on the project, most workflows follow a similar structure.

Before making any changes, take time to explore the data set. Ask questions like:

Common challenges:

Best practices:

Example:

A retail company collects online sales data across multiple regions. During discovery, they realize that some regions store customer addresses in separate fields (street, city, zip), while others combine them into one text block.
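A discovery pass like this one can start with a simple profile of which fields each region actually fills in. The sketch below is a minimal illustration in plain Python, not a prescribed method: the sample rows, the field names (`street`, `city`, `zip`, `address`), and the `profile_fields` helper are all assumptions made up for this example.

```python
from collections import Counter, defaultdict

# Hypothetical sample rows; the field names are assumptions for illustration.
orders = [
    {"region": "EU", "street": "1 Main St", "city": "Berlin", "zip": "10115"},
    {"region": "EU", "street": "5 Park Ave", "city": "Paris", "zip": "75001"},
    {"region": "US", "address": "9 Elm St, Austin, 78701"},
]


def profile_fields(rows, group_key="region"):
    """Count how often each non-empty field appears within each group."""
    profile = defaultdict(Counter)
    for row in rows:
        for field, value in row.items():
            # Skip the grouping column itself and any empty values.
            if field != group_key and value not in (None, ""):
                profile[row[group_key]][field] += 1
    return {group: dict(counts) for group, counts in profile.items()}


field_profile = profile_fields(orders)
print(field_profile)
```

A profile like this makes the mismatch visible early: one region reports `street`, `city`, and `zip`, while the other reports a single `address` field that will need to be split or mapped before the data sets can be combined.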

Cleaning involves fixing or removing errors, inconsistencies, or inaccuracies.

Typical cleaning tasks:

Common challenges:

Best practices:

Example:

An e-commerce company analyzing customer feedback surveys notices inconsistencies in state names, which are sometimes spelled out ("California") and sometimes abbreviated as "CA" or "Calif."
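One common way to handle this kind of inconsistency is to map every known variant to a single canonical value. The sketch below assumes a small, hand-maintained alias table; `STATE_ALIASES` and `normalize_state` are hypothetical names for this example, and a real project would likely need a much fuller list of states and spellings.

```python
# Hypothetical alias table: every known variant maps to one canonical code.
STATE_ALIASES = {
    "california": "CA",
    "calif.": "CA",
    "calif": "CA",
    "ca": "CA",
}


def normalize_state(raw):
    """Return the canonical state code, or None if the value is unrecognized."""
    key = raw.strip().lower()
    # Returning None (rather than guessing) flags the value for manual review.
    return STATE_ALIASES.get(key)


for value in ["California", "CA", " Calif. ", "Cali"]:
    print(repr(value), "->", normalize_state(value))
```

Unrecognized values come back as `None` instead of being silently passed through, so they can be counted and reviewed rather than slipping into the cleaned data set.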

Sometimes, data needs to be reshaped or reorganized to meet analytical needs.

Tasks can include:

Common challenges:

Best practices:

Example:

A company wants to analyze customer buying patterns over time, but its sales data stores "Date of Purchase" in a text field instead of a true date format.
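Converting a text field into a true date usually means trying the formats you expect to see and flagging anything that doesn't fit. The sketch below uses Python's standard `datetime.strptime`; the list of candidate formats and the `parse_purchase_date` helper are assumptions for illustration, and the right formats depend on what the discovery step found in your data.

```python
from datetime import date, datetime

# Assumed formats for this example; adjust to match what the data contains.
CANDIDATE_FORMATS = ("%Y-%m-%d", "%m/%d/%Y", "%B %d, %Y")


def parse_purchase_date(text):
    """Try each candidate format in order; return a date or None."""
    cleaned = text.strip()
    for fmt in CANDIDATE_FORMATS:
        try:
            return datetime.strptime(cleaned, fmt).date()
        except ValueError:
            continue  # This format did not match; try the next one.
    return None  # No format matched: flag the row for review.


print(parse_purchase_date("2024-03-05"))
print(parse_purchase_date("03/05/2024"))
print(parse_purchase_date("not a date"))
```

Note that this sketch reads a value like "03/05/2024" as month-first. That is an assumption, and it is worth confirming with the data owner, because day-first sources would parse without error but yield the wrong date.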

Enhancing a data set can make it even more valuable for decision-making.

Typical enrichment activities:

Common challenges:

Best practices:

Example:

A marketing team has a database of customer email addresses and wants to personalize campaigns based on location. However, the current data only includes zip codes.
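Enrichment in a case like this often comes down to joining the existing records against a reference table. The sketch below is a minimal illustration: `ZIP_LOOKUP` is a tiny hypothetical stand-in for a real zip code reference data set, and the customer records and `enrich_with_location` helper are invented for this example.

```python
# Tiny stand-in for a real zip code reference data set (assumption).
ZIP_LOOKUP = {
    "94105": ("San Francisco", "CA"),
    "10001": ("New York", "NY"),
}

# Hypothetical customer records that only carry a zip code.
customers = [
    {"email": "a@example.com", "zip": "94105"},
    {"email": "b@example.com", "zip": "10001"},
    {"email": "c@example.com", "zip": "00000"},
]


def enrich_with_location(rows, lookup):
    """Return new records with city and state added from the lookup table."""
    enriched = []
    for row in rows:
        # Unknown zip codes get None values instead of a guessed location.
        city, state = lookup.get(row["zip"], (None, None))
        enriched.append({**row, "city": city, "state": state})
    return enriched


enriched_customers = enrich_with_location(customers, ZIP_LOOKUP)
for record in enriched_customers:
    print(record)
```

The original records are left untouched and the enriched copies keep zip codes that found no match, which makes it easy to measure how complete the reference data is before campaigns rely on it.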

Validation ensures that transformations were applied correctly and the data remains trustworthy.

Validation activities include:

Common challenges:

Best practices:

Example:

After merging sales and customer support data sets, a SaaS company notices that some customer IDs are missing in the merged table.
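A quick way to investigate missing IDs after a merge is to compare the sets of keys on each side. The sketch below assumes both data sets share a `customer_id` column; the sample rows and the `find_unmatched_ids` helper are hypothetical and only illustrate the check.

```python
# Hypothetical sample rows; the shared key name is an assumption.
sales = [
    {"customer_id": "C001", "amount": 120},
    {"customer_id": "C002", "amount": 80},
    {"customer_id": "C003", "amount": 45},
]
support = [
    {"customer_id": "C002", "tickets": 1},
    {"customer_id": "C003", "tickets": 4},
    {"customer_id": "C004", "tickets": 2},
]


def find_unmatched_ids(left_rows, right_rows, key="customer_id"):
    """Report key values that appear on only one side of the merge."""
    left_ids = {row[key] for row in left_rows}
    right_ids = {row[key] for row in right_rows}
    return {
        "only_in_left": sorted(left_ids - right_ids),
        "only_in_right": sorted(right_ids - left_ids),
    }


unmatched = find_unmatched_ids(sales, support)
print(unmatched)
```

Running a check like this before and after the merge shows whether IDs were dropped by the join itself or were never present in one of the sources.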

Once validated, the cleaned and structured data needs to be made available for analysis.

Publication activities include:

Common challenges:

Best practices:

Example:

A financial services firm prepares quarterly reports for leadership. Historically, each team pulled its own numbers, leading to conflicting metrics.

Data wrangling isn’t just a theoretical exercise—it’s a practical process that organizations use every day to turn scattered, messy data into clear, actionable insights. The following real-world examples show how leading companies identified messy or disconnected data problems, applied wrangling techniques, and achieved measurable business results.

As you review these cases, notice how common steps like cleaning, structuring, enriching, validating, and publishing data made a meaningful impact across industries.

DHL operates one of the largest logistics networks in the world, generating massive volumes of shipment, delivery, and warehouse data daily. Before wrangling their data, critical metrics were buried across disconnected systems, making real-time operational decisions difficult.

Using Domo, DHL unified its operational data into a single environment, cleaned inconsistent location codes, standardized timestamps, and enriched shipment records with real-time tracking updates. Validation steps ensured consistent reporting across the network. As a result, DHL empowered teams with faster, more accurate insights, leading to improved delivery times and enhanced customer satisfaction.

La-Z-Boy faced challenges managing siloed information across sales, manufacturing, and customer service departments. Each system stored data differently, making it hard to connect the dots across the customer journey.

Through a coordinated data wrangling initiative, La-Z-Boy cleaned and standardized product and customer data, structured historical and live data sets for easier access, and enriched records with customer satisfaction metrics. After validating the unified data sets, they deployed self-service dashboards, giving employees at all levels the ability to act on trusted data. This transformation accelerated decision-making and improved operational agility across the business.

Cisco’s marketing organization collected extensive campaign, engagement, and sales data. However, inconsistencies in field names, outdated formats, and duplicate records created bottlenecks that slowed global reporting efforts.

With Domo, Cisco automated data cleaning processes (standardizing fields like country codes and customer statuses), structured campaign data to map directly to sales outcomes, and enriched internal data with external firmographic insights. Rigorous validation protocols ensured data quality before publishing dashboards. The result: faster marketing performance insights, better alignment between sales and marketing, and stronger global scalability.

Data wrangling is a crucial first step in transforming raw data into meaningful insights. By carefully exploring, cleaning, structuring, enriching, validating, and publishing your data, you lay the groundwork for confident decision-making, better strategies, and measurable results.

The term "wrangling" captures the reality: real-world data rarely arrives in perfect form. It often requires taming, cleaning, and reshaping before it can truly drive business value. Organizations that invest in wrangling gain a critical advantage—they spend less time cleaning up messes and more time uncovering growth opportunities.

But you don’t have to tackle data wrangling challenges alone. Domo empowers businesses to connect, clean, transform, and manage data seamlessly—all within a single platform. Whether you're unifying siloed systems, automating cleaning tasks, or scaling real-time analytics, Domo helps you move from messy data to meaningful action faster.

Learn how Domo simplifies data wrangling and drives better insights.