
Since ChatGPT launched, AI companies such as Anthropic have been racing to build language models that can converse and handle tasks almost the way humans do. Along the way, they have drawn attention and billions of dollars in investment.

And yet, for all that rushing, most enterprises are still figuring things out. According to a recent McKinsey report, 92 percent of companies plan to increase their AI investments over the next few years. Even with all that spending, only 1 percent of businesses have fully integrated AI at scale. Why? LLMs are powerful, but they can be costly and complicated to operate if not approached strategically.

This is where small language models (SLMs) are changing the game. Instead of defaulting to "bigger is better," businesses are asking a better question: "What model is right for the job at hand?" For many well-defined tasks, SLMs deliver similar results while using far fewer resources, which makes them a smart option.

In this article, we'll explain what SLMs are, look at examples of small language models, and show how they are faster, more focused, and easier to govern than LLMs. We will also discuss their role in the future of agentic AI and how Domo's Bring Your Own Model (BYOM) strategy makes adopting SLMs seamless.

Let’s start with the basics by defining what a small language model is.

A small language model works like a large language model: it processes and generates human language, but with far fewer parameters. SLMs are called "small" because they are much less complex than models like GPT-4.1 or Claude 4. LLMs can have hundreds of billions or even trillions of parameters. SLMs, by contrast, usually have anywhere from a few hundred million to a few billion.

LLMs are designed for broad, general-purpose use cases and often require significant compute resources. In contrast, SLMs focus on being efficient and targeted for specific tasks, making them faster, lighter, and easier to govern.
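To put those parameter counts in perspective, here is a rough back-of-the-envelope estimate for a decoder-only transformer. It uses the common approximation of about 12·d² parameters per layer, where d is the model width. That figure covers roughly 4·d² for the attention projections and 8·d² for a feed-forward block four times as wide. The token-embedding matrix is added on top. Both configurations below are hypothetical round numbers, not the published specs of any named model. Real architectures vary, for example with gated feed-forward layers or untied output embeddings.

```python
def approx_params(n_layers: int, d_model: int, vocab_size: int) -> int:
    """Rough parameter count for a decoder-only transformer.

    Per layer: ~4*d^2 for the attention projections (Q, K, V, output)
    plus ~8*d^2 for a feed-forward block with hidden size 4*d.
    Biases and layer norms are ignored because they are comparatively tiny.
    """
    per_layer = 12 * d_model * d_model
    embeddings = vocab_size * d_model  # assumes input/output embeddings are shared
    return n_layers * per_layer + embeddings


# Hypothetical configurations chosen only to illustrate scale.
small = approx_params(n_layers=24, d_model=2048, vocab_size=32_000)
large = approx_params(n_layers=96, d_model=12_288, vocab_size=50_000)

print(f"small: {small / 1e9:.1f}B parameters")  # small: 1.3B parameters
print(f"large: {large / 1e9:.1f}B parameters")  # large: 174.6B parameters
```

Because the per-layer term grows with the square of the width, a model about 6x wider and 4x deeper ends up with over 100x more parameters.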

Here’s a quick breakdown of common SLM sizes:

Now, let’s explore when SLMs might be the right choice compared to larger models.

In many cases, small language models offer a smarter, more targeted alternative to large models, especially when efficiency and control matter most.

Patrick Buell, chief innovation officer at Hakkoda, framed the strategic choice this way during the Agentic AI Summit: "SLMs are like ants carrying grains of sand efficiently in an anthill, while LLMs are elephants, powerful but often overkill for specific enterprise tasks." For most well-defined business processes, you don't need an elephant. You need a coordinated team of ants.

Now that we know when to use an SLM, let's look at how SLMs can approach LLM-level performance on targeted tasks despite their smaller size.

The goal in building a small language model is to retain as much capability as possible while making the model smaller and more efficient. SLMs typically share the same transformer architecture that LLMs use to understand context. Many are also produced or optimized with compression techniques that help "shrink" a model without "breaking" its core functionality.

Here are the common shrinking methods.

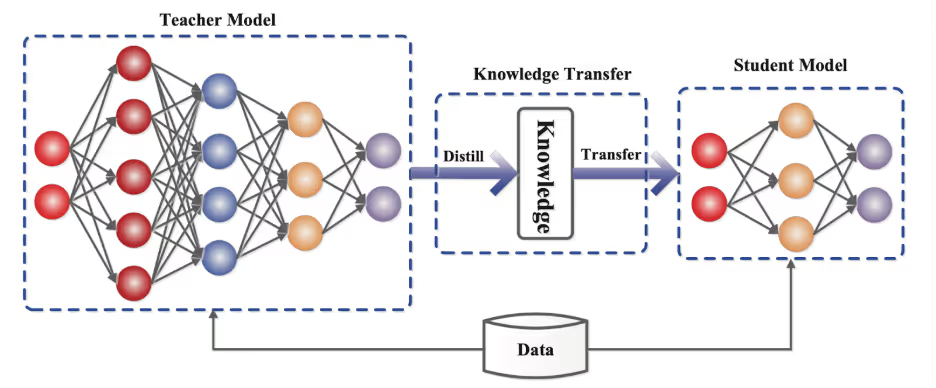

Knowledge distillation (KD) trains a smaller "student" model to learn from a larger, pre-trained "teacher" model. The teacher has already been trained on a large amount of data and has captured the important patterns in it. The student is then trained to reproduce the teacher's outputs, such as its predicted probabilities for each possible next token, rather than learning from raw labels alone.
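A common way to train the student is to minimize a blend of two signals. The first measures how closely the student's output distribution matches the teacher's, with both softened by a temperature. The second is ordinary cross-entropy against the true label. The sketch below computes such a loss in plain Python for a single prediction. The temperature, the weighting `alpha`, and the toy logits are illustrative assumptions. Real training would compute this over batches inside a deep learning framework and backpropagate through the student only.

```python
import math


def softmax(logits: list[float], temperature: float = 1.0) -> list[float]:
    """Turn logits into probabilities; a higher temperature gives a softer distribution."""
    scaled = [x / temperature for x in logits]
    top = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - top) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def kl_divergence(p: list[float], q: list[float]) -> float:
    """KL(p || q): how far the student's distribution q is from the teacher's p."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


def distillation_loss(
    student_logits: list[float],
    teacher_logits: list[float],
    true_label: int,
    temperature: float = 2.0,
    alpha: float = 0.5,
) -> float:
    """Weighted mix of a soft-target term (match the teacher) and ordinary cross-entropy."""
    soft_teacher = softmax(teacher_logits, temperature)
    soft_student = softmax(student_logits, temperature)
    # Scaling by T^2 is a common convention that keeps the soft-target term
    # on a scale comparable to the hard-label term.
    soft_loss = kl_divergence(soft_teacher, soft_student) * temperature ** 2
    hard_loss = -math.log(softmax(student_logits)[true_label])
    return alpha * soft_loss + (1 - alpha) * hard_loss


# Hypothetical logits over a three-token vocabulary.
teacher = [4.0, 1.0, 0.5]
close_student = [3.5, 1.2, 0.4]  # already mimics the teacher fairly well
far_student = [0.5, 3.0, 1.0]    # prefers a different token entirely

print(distillation_loss(close_student, teacher, true_label=0))
print(distillation_loss(far_student, teacher, true_label=0))
```

The student that already resembles the teacher gets the lower loss. Training pushes every student in that direction.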

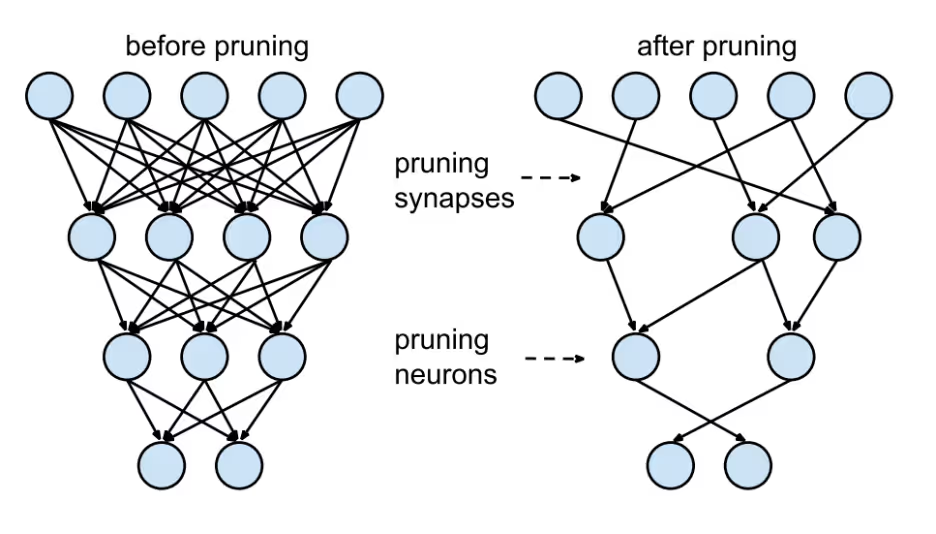

Pruning involves identifying and removing redundant or non-critical parameters (or connections) within the model’s neural network after it has been trained. This reduces the model’s size and computational load, often with minimal impact on its performance for its target tasks.
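One simple, widely used way to decide which parameters are "non-critical" is magnitude pruning. It treats the weights closest to zero as the least important connections and sets them to zero. The sketch below applies this to a tiny hypothetical weight matrix in plain Python. It is not tied to any particular framework. In practice, pruning is usually followed by some fine-tuning so the remaining weights can compensate.

```python
def magnitude_prune(weights: list[list[float]], sparsity: float) -> list[list[float]]:
    """Zero out roughly the `sparsity` fraction of weights with the smallest magnitudes.

    This is unstructured magnitude pruning: individual connections are removed
    based only on how close their weight is to zero.
    """
    magnitudes = sorted(abs(w) for row in weights for w in row)
    k = int(len(magnitudes) * sparsity)  # number of weights to remove
    if k == 0:
        return [row[:] for row in weights]
    threshold = magnitudes[k - 1]
    # Weights tied with the threshold are also pruned, so slightly more than k
    # may be removed; acceptable for a sketch.
    return [[0.0 if abs(w) <= threshold else w for w in row] for row in weights]


# Hypothetical 2x3 weight matrix from one layer.
layer = [[0.8, -0.05, 0.3], [-0.01, 0.9, -0.4]]
pruned = magnitude_prune(layer, sparsity=0.5)
print(pruned)  # [[0.8, 0.0, 0.0], [0.0, 0.9, -0.4]]
```

Zeroed weights only translate into real memory and speed savings when they are stored in a sparse format or when whole structures, such as neurons or attention heads, are removed.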

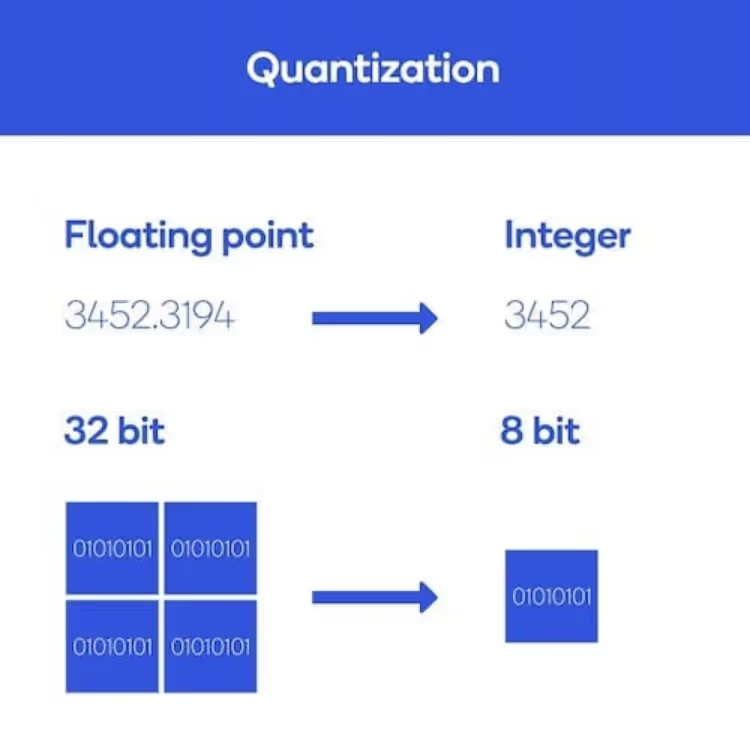

Quantization makes the model lighter by reducing the numerical precision used to store its parameters (weights). For example, instead of 32-bit floating-point numbers, a model might be quantized to 16-bit floating-point numbers or even 8-bit integers. This cuts memory and storage requirements. It can also make inference faster and more energy-efficient, particularly on hardware that supports low-precision arithmetic.
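The sketch below shows symmetric 8-bit quantization of a handful of hypothetical weights. Each float is divided by a single scale factor and rounded to an integer between -127 and 127. At inference time, the stored integers are multiplied back by the scale. The sketch also works through the storage arithmetic for a hypothetical 7-billion-parameter model. Production toolkits use finer-grained scales (per channel or per group) and other refinements, so treat this as an illustration of the idea only.

```python
def quantize_int8(weights: list[float]) -> tuple[list[int], float]:
    """Symmetric per-tensor quantization: map floats to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: list[int], scale: float) -> list[float]:
    """Recover approximate float values from the stored integers and the scale."""
    return [v * scale for v in q]


def weight_memory_gb(num_params: float, bits: int) -> float:
    """Memory needed just to store the weights (ignores activations and caches)."""
    return num_params * bits / 8 / 1e9


weights = [0.42, -1.27, 0.003, 0.88]  # hypothetical weights
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
print(q)  # [42, -127, 0, 88]

# Storage for a hypothetical 7B-parameter model at different precisions.
for bits in (32, 16, 8, 4):
    print(f"{bits:>2}-bit: {weight_memory_gb(7e9, bits):.1f} GB")
# 32-bit: 28.0 GB
# 16-bit: 14.0 GB
#  8-bit: 7.0 GB
#  4-bit: 3.5 GB
```

Note how the smallest weight, 0.003, rounds to zero. That lost precision is the price paid for a 4x reduction in storage compared with 32-bit floats.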

The SLM ecosystem is growing quickly, with major tech companies and the open-source community releasing highly capable models. Here are some well-known examples of small language models:

This wide choice of capable small language models gives enterprises the flexibility to pick the ones that best fit their specific needs. Next, let's explore how SLMs power agentic AI.

One of the most exciting ways SLMs are making an impact is in agentic AI. This shift moves AI from simply answering questions to actually taking action and getting things done on its own.

So, what is agentic AI?

Agentic AI refers to a system that can handle tasks on its own. It can reason, plan, and execute multi-step tasks to achieve a specific outcome with little to no human intervention.

Instead of a single giant model trying to do everything, an agentic system acts as an orchestrator. It takes a big goal, breaks it down into smaller steps, and then assigns each step to the right agent. And very often, the engine powering each of those focused agents is a specialized small language model.
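The orchestration pattern can be sketched in a few lines. A planner breaks a goal into steps, and a registry routes each step to the specialist agent for that skill. All names here are hypothetical. Each agent function is a placeholder for a call to a small, task-specific model, and the fixed plan stands in for whatever planning logic a real orchestrator would use.

```python
from typing import Callable


# Hypothetical specialist agents. Each one stands in for a call to a small,
# task-specific model; here they just wrap their input in a label.
def extract_facts(text: str) -> str:
    return f"facts({text})"


def summarize(text: str) -> str:
    return f"summary({text})"


def draft_reply(text: str) -> str:
    return f"reply({text})"


# Registry mapping each skill to the agent that handles it.
AGENTS: dict[str, Callable[[str], str]] = {
    "extract": extract_facts,
    "summarize": summarize,
    "draft": draft_reply,
}


def plan(goal: str) -> list[str]:
    """Break a goal into an ordered list of skills.

    A fixed plan keeps the sketch simple; a real orchestrator might use a
    model to decide which steps a given goal needs.
    """
    return ["extract", "summarize", "draft"]


def orchestrate(goal: str) -> str:
    """Run each step with its specialist, feeding each output into the next step."""
    result = goal
    for skill in plan(goal):
        result = AGENTS[skill](result)
    return result


print(orchestrate("customer email"))  # reply(summary(facts(customer email)))
```

Keeping the routing table separate from the agents makes it straightforward to swap one specialist, for example a different SLM, without touching the rest of the workflow.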

To understand where all of this is heading, it helps to have a roadmap. At the Agentic AI Summit, Patrick Buell outlined a multi-stage journey that businesses will likely take as they move toward this autonomous future. Below, we summarize Buell’s stages of the journey:

This is where most organizations are today, using AI to augment human work. The focus is on improving productivity by automating lower-value work so people can focus on the higher-value tasks.

This stage typically involves "max one to two agents," such as an AI app that summarizes your meeting notes or helps draft your emails. This is a perfect use case for an SLM: a small, fast, and efficient model dedicated to doing one thing exceptionally well.

The next step is to automate more complex workflows that involve a series of tasks. This is where an agent might take a process that used to be manual and handle it from start to finish. However, this stage maintains a “human-in-the-loop” approach, where a person provides the final approval before a critical action is taken.

For example, an agent powered by a compliance-focused SLM might flag a problematic clause in a contract, but it would wait for a lawyer to approve the change.
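A human-in-the-loop gate like the one in the contract example can be expressed as a simple rule. Routine actions run directly, while critical actions run only after a person approves them. The class and function names below are hypothetical. The `approve` callback stands in for whatever review step a real system would use, such as a task in a lawyer's queue.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class ProposedAction:
    description: str
    critical: bool  # critical actions need human sign-off before they run


def run_with_approval(
    action: ProposedAction,
    execute: Callable[[ProposedAction], str],
    approve: Callable[[ProposedAction], bool],
) -> str:
    """Run routine actions directly; run critical ones only if a human approves."""
    if action.critical and not approve(action):
        return f"held for review: {action.description}"
    return execute(action)


def apply_change(action: ProposedAction) -> str:
    # Placeholder for whatever system actually makes the change.
    return f"applied: {action.description}"


# Hypothetical example: an agent flags a contract clause and proposes an edit.
proposal = ProposedAction("replace flagged liability clause", critical=True)

print(run_with_approval(proposal, apply_change, approve=lambda a: False))
# held for review: replace flagged liability clause
print(run_with_approval(proposal, apply_change, approve=lambda a: True))
# applied: replace flagged liability clause
```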

This is where things truly transform. Buell explains that this stage requires us to rethink entire business processes. Instead of just automating the old way of doing things, we create systems that focus entirely on results.

He describes this as moving toward "swarms of agents": thousands of specialized agents all working together toward a single goal. Some agents might focus on security, others on ethics, and others on business efficiency.

Because each agent in the swarm is a narrow specialist, each one is an ideal job for a dedicated SLM. In this phase, humans act more like "community planners." They set the goals and let the swarm figure out the best way to achieve them.
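A swarm of thousands of agents is far beyond a snippet. However, the basic pattern of fanning one proposal out to several narrow specialists and combining their verdicts can be sketched with Python's `asyncio`. The three focuses mirror the examples above. Everything else is hypothetical: the string-matching "review" is a toy stand-in for a call to a dedicated model, and requiring every specialist to agree is only one possible way to combine verdicts.

```python
import asyncio

# Toy "red flag" each hypothetical specialist looks for. A real agent would
# call a small model trained or prompted for its specialty instead.
RED_FLAGS = {
    "security": "plaintext password",
    "ethics": "dark pattern",
    "efficiency": "manual re-entry",
}


async def specialist(focus: str, proposal: str) -> tuple[str, bool]:
    """One narrow agent: approve the proposal unless it hits this agent's red flag."""
    await asyncio.sleep(0)  # stands in for the latency of a model call
    return focus, RED_FLAGS[focus] not in proposal


async def swarm_review(proposal: str) -> dict[str, bool]:
    """Send the same proposal to every specialist concurrently and collect verdicts."""
    verdicts = await asyncio.gather(*(specialist(f, proposal) for f in RED_FLAGS))
    return dict(verdicts)


result = asyncio.run(swarm_review("Keep a plaintext password list for the support team"))
print(result)  # {'security': False, 'ethics': True, 'efficiency': True}
print("approved" if all(result.values()) else "sent back for revision")
# sent back for revision
```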

This is the final stage in the vision, where the system becomes a "living organization" capable of self-design. At this point, the system might have tens or even hundreds of thousands of agents running. People would act more like a board of directors or research scientists, guiding the ecosystem and testing new ideas.

Agentic AI demands efficiency, flexibility, and control, which are areas where SLMs excel. Here’s how they enable scalable, secure, and responsive automation.

SLMs are already transforming industries by powering intelligent agents that automate complex workflows. Here are some real-world examples where SLMs get work done faster and smarter:

Next, let’s explore how Domo makes SLM deployment seamless.

Knowing that SLMs are a great fit is one thing. Having a platform to actually use them safely and effectively is another. With Domo's hybrid AI strategy, you can use the right model for the right task without sacrificing control. We make it easy for you to bring your own model, including any SLM you want to use.

Catch up on the Agentic AI Summit replay to explore how SLMs can transform your enterprise AI strategy.