
Picture this: you’re a data architect whose mission is to set up a new AI tool that helps your company understand customer behavior better. The company’s excited about the potential to increase sales. But there’s a catch: you’re faced with a maze of rules, ethical concerns, and the risk of biased outputs. A single misstep could derail the whole project or even damage customer trust.

It’s an all too familiar situation for many data architects today: how do you adopt AI quickly while ensuring you’re doing it responsibly?

Many organizations are rushing into the world of artificial intelligence (AI) without a proper AI governance plan. This can leave them vulnerable to serious risks such as data breaches and severe regulatory fines.

Take, for example, the case of the remote tutoring company iTutor Group, which had to pay out a staggering $365,000 settlement due to ungoverned AI practices that led to unlawful age discrimination.

But the good news? When done right, AI governance can actually speed up the adoption of AI, rather than slowing it down. It builds trust, reduces risk, and helps organizations scale their AI efforts with confidence.

In this article, we’ll provide you with a practical guide to AI governance. We’ll cover the key components, the steps to bring AI to life in your everyday work, and share a real-world use case.

AI adoption is on the rise, with 72 percent of organizations using it in at least one business function, so it’s a great time to ask what an AI governance framework really means.

An AI governance framework is a way to manage AI systems throughout their lifecycle. It’s the set of processes, standards, and guardrails that ensure AI systems and tools operate safely and ethically.

As AI has moved well beyond testing, it’s become an integral part of our daily business operations, driving real business impact. But without a proper governance framework in place, AI can lead to challenges like fragmented learning, legal issues, and even risks to your brand’s reputation.

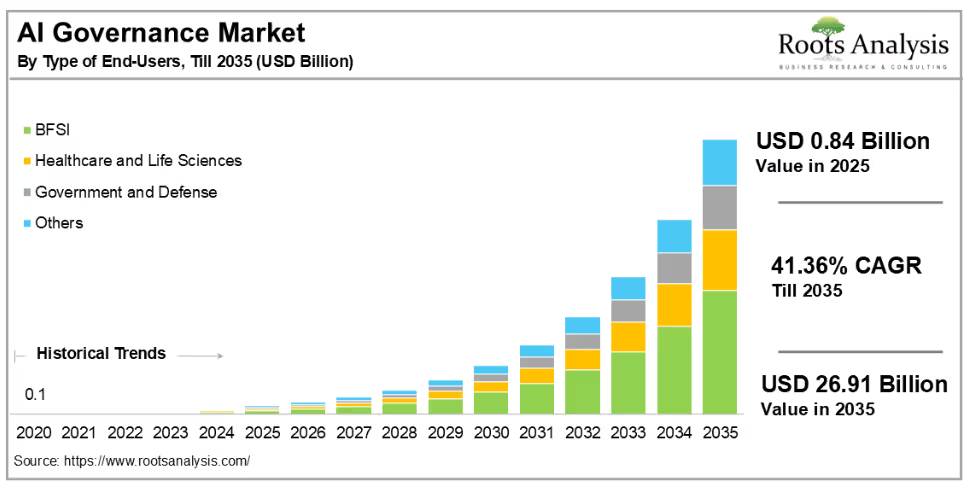

As these risks grow and challenges become more pressing, so does the demand for effective solutions. In fact, the global AI governance market is expected to reach $26.91 billion by 2035. This growth underscores how formal oversight and frameworks are becoming essential for responsible AI adoption, especially with strict regulations like the EU’s AI Act coming into effect.

Why should organizations like yours implement AI governance in the first place? Because it’s about building trust as you scale AI across your organization. Your AI governance becomes your safety net to help you move faster, not slower.

The whole point is to help organizations tap into AI’s potential without compromising their integrity.

Here’s what a solid governance framework will accomplish:

Governance guides AI development toward fairness, safety, and respect for human rights. This means going beyond technical performance. Organizations must ask: Who is this model serving? Who might it be leaving out? Ethical governance promotes transparency, accountability, and thorough audits of training data to prevent the reinforcement of bias.

Take Microsoft’s six responsible AI principles, which the company created after early missteps such as the Tay chatbot and built on the NIST AI Risk Management Framework. These principles now guide how it designs, tests, and launches AI across every team and product.

Governance frameworks are designed to identify and address critical issues, such as biased outputs, compliance issues, and security vulnerabilities. Effective risk management is also crucial for achieving a positive return on investment (ROI) and helps you avoid “AI pilot purgatory.” This is the endless cycle where AI pilot programs never actually become real solutions.

Compliance is not optional. Local and international regulations are getting stricter, and non-compliance can result in substantial financial penalties. Under the EU AI Act, the most serious violations can draw fines of up to €35 million or 7 percent of your global annual turnover, whichever is higher.

Your governance framework becomes your compliance roadmap, ensuring your AI deployments stay legal from day one.

Good governance provides the structure to grow your AI initiatives with confidence. When stakeholders see that you are committed to ethical AI practices, trust follows. And trust? It helps you move fast, adopting AI without breaking things.

Integrating security and transparency into your AI from the start protects against risks and ensures a more robust system. This approach supports a reliable framework for sustainable AI growth.

But how do you build responsible AI into the foundation of your organization? There are some key components that have to fit together cohesively. Each one helps you evaluate AI systems for how well they comply with regulatory standards and align with your company goals.

Trust starts with understanding. When AI systems operate as black boxes, stakeholders lose confidence quickly. Your governance framework should prioritize transparency at every level.

To put this into practice, focus on the following elements:

Effective governance ensures your AI systems get managed properly, with consistent results you can count on and clear accountability when things go wrong.

AI systems must prioritize user safety and well-being. It’s about building technology that genuinely serves people’s interests beyond legal compliance.

Protecting AI systems from malicious activities maintains both trust and operational integrity. Security cannot be an afterthought.

AI systems should serve all users equitably, not just select groups. Bias in AI can create unfair outcomes that harm both personal relationships and business outcomes.
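One way to make the fairness principle measurable is to compare how often each group receives a favorable outcome. The sketch below is a minimal illustration, not a complete bias audit: the group labels, the sample decisions, and the function names are all hypothetical, and the 0.8 threshold borrows the "four-fifths" rule of thumb from US employment guidance as an assumed starting point rather than a legal standard for your use case.

```python
from collections import defaultdict


def selection_rates(records):
    """Return the share of favorable outcomes per group.

    `records` is an iterable of (group, outcome) pairs, where outcome is
    True when the model produced the favorable decision.
    """
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, outcome in records:
        totals[group] += 1
        if outcome:
            positives[group] += 1
    return {group: positives[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates):
    """Ratio of the lowest group selection rate to the highest."""
    highest = max(rates.values())
    if highest == 0:
        # No group received a favorable outcome, so there is no gap to measure.
        return 1.0
    return min(rates.values()) / highest


# Hypothetical decisions: (group label, favorable outcome?)
sample = [
    ("under_40", True), ("under_40", True), ("under_40", True), ("under_40", False),
    ("over_40", True), ("over_40", False), ("over_40", False), ("over_40", False),
]

rates = selection_rates(sample)
ratio = disparate_impact_ratio(rates)

# Illustrative threshold only; set the real one with legal and compliance input.
needs_review = ratio < 0.8
```

A low ratio does not prove discrimination on its own, but it is a cheap signal that a model deserves a closer look before it reaches production.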

Now let’s take a look at some of the common challenges when putting AI governance into practice.

Putting AI governance into practice may sound straightforward, but the reality can be quite challenging. Many businesses face hurdles in three key areas: how their teams work together, the technology they have in place, and how they allocate their resources.

Below are some of the challenges.

The challenging part is not writing policies but getting your team to follow them. Employees resist new workflows when they are already comfortable with their current processes.

Change is hard and most organizations feel it. Around 70 percent of change programs fail, largely because employees aren’t on board and leadership doesn’t fully support the process. This resistance creates “shadow AI,” where staff bypass IT approval and use unauthorized AI tools to get their work done faster.

Shadow AI creates serious problems. Data privacy becomes compromised. Security vulnerabilities multiply. Compliance goes out the window, and organizational trust erodes.

Most organizations face a fundamental mismatch: they are trying to implement modern AI governance on legacy infrastructure that was not designed for these demands.

Your current systems probably lack the processing power, storage capacity, and scalability required for current AI workloads. Integrating various AI applications with governance frameworks requires significant technical coordination and cross-team collaboration.

Building AI governance in-house is expensive and complex. It diverts budget and personnel from core business objectives while requiring specialized knowledge that many organizations lack.

The challenge is twofold: insufficient funding and a shortage of staff who understand both AI technology and governance requirements. This gap in expertise results in slower implementation and higher costs than expected.

Not every organization needs the same AI governance approach. The right framework depends on what your organization aims to achieve, its infrastructure, and the level of AI maturity.

Here’s how to choose the right one and tailor it to your organization’s needs:

Before selecting a framework, evaluate your organization’s current state. This evaluation identifies governance gaps and sets realistic implementation priorities.

Governance requirements vary across sectors. Consider:

Several widely respected AI governance frameworks offer structure and best practices:

When choosing or customizing a framework, prioritize:

With a framework chosen, let’s explore practical steps to operationalize AI governance effectively.

Creating a governance framework is one thing, but getting it to function effectively is where many organizations face challenges. The gap between theory and implementation hinders even experienced teams.

Here are the practical steps that make governance work without overwhelming your team.

AI governance is a necessity, as shown by leading organizations across sectors. The use cases below demonstrate how companies have effectively implemented AI governance frameworks in practice.

Microsoft’s 2016 Tay bot quickly became problematic after it began interacting with users online. Microsoft used this as an opportunity to improve its approach to responsible AI.

Their response created a comprehensive governance program:

IBM recognized that AI bias impacts entire industries, not just individual companies. Instead of creating solutions solely for internal use, they chose to address this challenge through open collaboration.

Mastercard processes billions of transactions globally, making AI governance essential for both security and customer trust. Their fraud detection systems handle sensitive financial data, which requires governance that goes beyond basic compliance.

We’re entering a new era where AI’s promise comes with real accountability. The organizations that thrive will be those who see governance not as a hurdle, but as a catalyst for trust, agility, and growth.

Building effective AI governance doesn’t have to slow you down. With clear frameworks, practical steps, and a focus on transparency and fairness, you can unlock AI’s value while protecting your business and customers.

As you look to scale AI within your organization, thoughtful governance is what turns innovation into impact. If you’re ready to go deeper, check out the Future of AI limited series or our Security and Privacy FAQ for more details about how Domo thinks about AI governance.